Contra Marc Andreessen on AI

"The claim that you will completely control any system you build is obviously false, and a hacker like Marc should know that"

Marc Andreessen published a new essay about why AI will save the world. I had Marc on my podcast a few months ago (YouTube, Audio), and he was, as he usually is, very thoughtful and interesting. But in the case of AI, he fails to engage with the worries about AI misalignment. Instead, he substitutes aphorisms for arguments. He calls safety worriers cultists, questions their motives, and conflates their concerns with those of the woke “trust and safety” people.

I agree with his essay on a lot:

People grossly overstate the risks AI poses via misinformation and inequality.

Regulation is often counterproductive, and naively “regulating AI” is more likely to cause harm than good.

It would be really bad if China outpaces America in AI.

Technological progress throughout history has dramatically improved our quality of life. If we solve alignment, we can look forward to material and cultural abundance.

But Marc dismisses the concern that we may fail to control models, especially as they reach human level and beyond. And that’s where I disagree.

It’s just code

Marc writes:

> My view is that the idea that AI will decide to literally kill humanity is a profound category error. AI is not a living being that has been primed by billions of years of evolution to participate in the battle for the survival of the fittest, as animals are, and as we are. It is math – code – computers, built by people, owned by people, used by people, controlled by people. The idea that it will at some point develop a mind of its own and decide that it has motivations that lead it to try to kill us is a superstitious handwave.

The claim that you will completely control any system you build is obviously false, and a hacker like Marc should know that. The Soviet nuclear scientists who built the Chernobyl Nuclear Power Plant did not want it to melt down, the biologists at the Wuhan Institute of Virology didn’t want to release a deadly pandemic, and Robert Morris didn’t want to take down the entire internet.

The difference this time is that the system under question is an intelligence capable (according to Marc’s own blog post) of advising CEOs and government officials, helping military commanders make “better strategic and tactical decisions”, and solving technical and scientific problems beyond our current grasp. What could go wrong?

I just want to take a step back and ask Marc, or those who agree with him, what do you think happens as artificial neural networks get smarter and smarter? In the blog post, Marc says that these models will soon become loving tutors and coaches, frontier scientists and creative artists, that they will “take on new challenges that have been impossible to tackle without AI, from curing all diseases to achieving interstellar travel.”

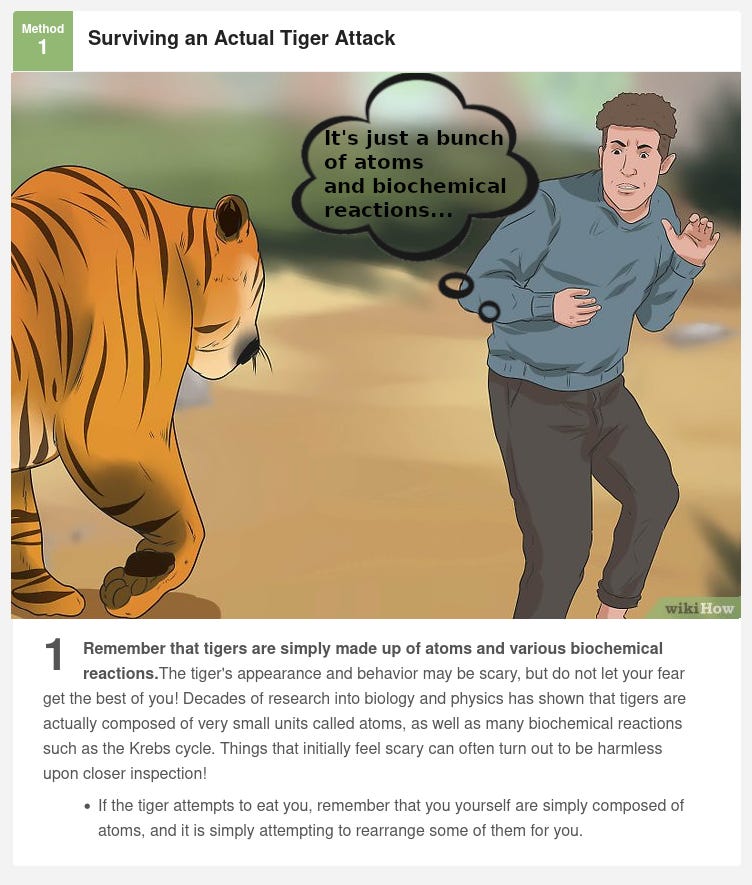

How does it do all this without developing something like a mind? [1] Why do you think something so smart that it can solve problems beyond the grasp of human civilization will somehow totally be in your control? Why do you think creating a general intelligence just goes well by default?

Saying that AI can’t be dangerous because it’s just math and code is like saying tigers can’t hurt you because they’re just a clump of biochemical reactions. Of course it’s just math! What else could it be but math? Marc complains later that AI worriers don’t have a falsifiable hypothesis, and I’ll address that directly in a second, but what is his falsifiable hypothesis? What would convince him AI can be dangerous? Would some wizard have to create a shape-shifting intelligent goo in a cauldron? Because short of magic, any intelligence we build will be made of math and code.

As a side note, I wonder if Marc has tried to think through why the pioneers of deep learning (including multiple Turing award winners), the CEOs of all the big AI labs, and the researchers working most closely with advanced models think AI is an existential risk. Surely he doesn’t think he could just hand this essay to them, and they’d say, “Oh it’s just code! Why didn’t I think of that? Shit, what was I even worried about?”

This meme is from nearcyan on Twitter

AI doesn’t even need to have bad goals in order to be disastrously dangerous. It can simply pursue a goal we give it in a way we never intended. Even within human societies, optimizing on a seemingly banal goal has led to awful consequences (maximize equality -> communism causes uniform suffering and death, expand your nation’s sphere of control -> leaders start a war that kills millions of people).

With advanced AI, the situation is much worse. We’re talking about something that is potentially more powerful than any human, yet doesn’t start off with the hundreds of nuanced and inarticulable values embedded in human psychology as common sense. We laugh at toy examples, like “When tasked with solving depression, the AI develops an advanced opioid and hooks everyone up to an IV.” The AI is not doing this because it’s stupid, but because it doesn’t automatically have all of our contradictory and often counterbalancing values. We live in a world where the only intelligent optimizers are other humans that basically think like us and want similar kinds of things. So it becomes hard for us to imagine what it would be like to have a different type of mind come online, and how strange and destructive its methods for executing our commands might be.

The testable hypothesis

> My response is that their position is non-scientific – What is the testable hypothesis? What would falsify the hypothesis? How do we know when we are getting into a danger zone? These questions go mainly unanswered apart from “You can’t prove it won’t happen!”

We’re already in a situation where Sydney Bing threatened to blackmail, bribe, and kill people (albeit in a cute and endearing way), and GPT-4 told a user how to manufacture biological weapons, and we’re still far from human level intelligence. Obviously, Sydney Bing can’t hurt you given its current capabilities, but do you feel confident that whatever caused it to go rogue won’t happen on a larger and more destructive scale with a model that is much smarter? If so, why?

A technologist and venture capitalist like Marc should be the most open to the possibility that there will be big unprecedented changes whose possibility we can’t immediately verify. When Marc created the first web browser in 1993, what was his immediately falsifiable thesis about the Internet?

Of course, you could just build GPT-8 and see if it does exactly what we want it to do in exactly the way we want it. We can then hope that gradient descent on predicting the next token somehow by default creates the drive to act in the best interest of humanity. Though evolution optimized our ancestors over billions of years to leave behind the most possible copies of our genes, and here we are using condoms and pulling out.

We’re hastily putting together a new airplane. Its smaller prototypes sometimes fall out of the sky in weird ways that we don’t really understand. Would you like to be the test pilot?

I wonder if Marc actually has asked a bunch of people working on technical alignment whether they have a falsifiable hypothesis or if he’s just assuming that the answer must be no. I posted Marc’s question in a group chat, and just off the cuff, Tristan Hume, who works on interpretability alignment research at Anthropic, supplied the following list (edited for clarity):

> I’d feel much better if we solved hallucinations and made models follow arbitrary rules in a way that nobody succeeded in red-teaming (in a way that wasn't just confusing the model into not understanding what it was doing).
>
> I’d feel pretty good if we then further came up with and implemented a really good supervision setup that could also identify and disincentivize model misbehavior, to the extent where me playing as the AI couldn't get anything past the supervision. Plus evaluations that were really good at eliciting capabilities and showed smooth progress and only mildly superhuman abilities. And our datacenters were secure enough I didn't believe that I could personally hack any of the major AI companies if I tried.
>
> I’d feel great if we solve interpretability to the extent where we can be confident there's no deception happening, or develop really good and clever deception evals, or come up with a strong theory of the training process and how it prevents deceptive solutions.

The only thing that can stop a bad guy with an LLM

> In short, AI doesn’t want, it doesn’t have goals, it doesn’t want to kill you, because it’s not alive. And AI is a machine – is not going to come alive any more than your toaster will.

This argument that AI is just like a toaster contradicts the entire rest of the essay, which argues that AI will be so powerful that it will save the world. The very reason why AI will be capable of creating stupendous benefits for humanity - if we get it right - is that it is much more powerful than any other technology we have built to date. If it were just like a toaster, it couldn’t “make everything we care about better.”

Even if it does exactly what a user wants it to do, how do you ensure that GPT-7 doesn’t teach a terrorist how to synthesize and transport a few dozen new deadly infectious diseases? Are you saying we could prevent such output? If so, what techniques for reliably controlling GPT-7’s behavior have you discovered which OpenAI would surely pay you a fortune to reveal? And even if you had such a technique (I’m sure they exist in principle - otherwise alignment would be hopeless), how do you make sure everyone who builds advanced models implements them? After all, Marc advocated against mandated evaluations, standards, and other regulations at the end of the essay.

Later, he writes:

> I said we should focus first on preventing AI-assisted crimes before they happen – wouldn’t such prevention mean banning AI? Well, there’s another way to prevent such actions, and that’s by using AI as a defensive tool. The same capabilities that make AI dangerous in the hands of bad guys with bad goals make it powerful in the hands of good guys with good goals – specifically the good guys whose job it is to prevent bad things from happening.

The tech tree of synthetic biology or cyber-warfare isn’t necessarily organized according to catchy aphorisms. How exactly does a good guy with a bioweapon stop a bad guy with a bioweapon? While we might hope that new technology must always help defense more than offense, there’s no metaphysical principle that says it must be so.

In fact, even in theory, offense would be more advantaged. Rockets are much easier to build than missile defense systems. Synthesizing and transporting a single new virus into the US is much easier than implementing metagenomic sequencing across every single airport, harbor, and border crossing (official and unofficial).

The reason a terrorist hasn’t done something 100x worse than 9/11 is not that the tech tree has in principle ruled out such attacks (in that our defense has progressed faster than their offense). Rather, there just aren’t that many very smart people who want to cause mass destruction. I don’t know what happens when only a modestly smart person can ask a chatbot for the recipe to cause 1 million deaths, but I see no reason to be confident that the outcome will be positive.

Regulation

> This causes some people to propose, well, in that case, let’s not take the risk, let’s ban AI now before this can happen. Unfortunately, AI is not some esoteric physical material that is hard to come by, like plutonium. It’s the opposite, it’s the easiest material in the world to come by – math and code.
>
> The AI cat is obviously already out of the bag. You can learn how to build AI from thousands of free online courses, books, papers, and videos, and there are outstanding open source implementations proliferating by the day. AI is like air – it will be everywhere. The level of totalitarian oppression that would be required to arrest that would be so draconian – a world government monitoring and controlling all computers? jackbooted thugs in black helicopters seizing rogue GPUs? – that we would not have a society left to protect.

Regulation may not be the answer, and may indeed make things worse (as in my view it has done in many other areas). I also worry that regulations will ban naughty thoughts in the name of “AI safety” in a way that does nothing about the larger alignment problem. But dismissing the legitimate worries about AI risk makes this more likely, not less. After all, if people think that “making GPT-4 woke” and “making sure GPT-7 doesn’t release a bioweapon” are the same thing, and we want to make sure GPT-7 doesn’t release a bioweapon, then I guess we gotta make GPT-4 woke!

The best LLMs today require hundreds of millions of dollars to train, and something close to human level will probably take billions of dollars using the current approach. The idea that we’ll need to seize the 3070s in every teenager’s gaming rig in order to evaluate and monitor the most advanced models is obviously ridiculous. Identifying and monitoring training runs that require tens of thousands of the newest server GPUs will require no more totalitarian oppression than the International Atomic Energy Agency requires to keep track of all of the world’s nukes.

You cannot argue, as Marc has repeatedly done, that regulations are so destructive that they have paralyzed entire sectors of the economy (like energy, medicine, education, law, and finance), and at the same time argue that powerful technologies are so inevitable that trying to constrain them would be hopeless anyways. Regulations either have effects (good or bad), or they don’t.

Motives

> In fact, these Baptists’ position is so non-scientific and so extreme – a conspiracy theory about math and code – and is already calling for physical violence, that I will do something I would normally not do and question their motives as well.
>
> Specifically, I think three things are going on:
>
> First, recall that John Von Neumann responded to Robert Oppenheimer’s famous hand-wringing about his role creating nuclear weapons – which helped end World War II and prevent World War III – with, “Some people confess guilt to claim credit for the sin.” What is the most dramatic way one can claim credit for the importance of one’s work without sounding overtly boastful? …
>
> Second, some of the Baptists are actually Bootleggers. There is a whole profession of “AI safety expert”, “AI ethicist”, “AI risk researcher”. They are paid to be doomers, and their statements should be processed appropriately.
>
> Third, California is justifiably famous for our many thousands of cults, … And the reality, which is obvious to everyone in the Bay Area but probably not outside of it, is that “AI risk” has developed into a cult, which has suddenly emerged into the daylight of global press attention and the public conversation. This cult has pulled in not just fringe characters, but also some actual industry experts and a not small number of wealthy donors – including, until recently, Sam Bankman-Fried. And it’s developed a full panoply of cult behaviors and beliefs…
>
> It turns out that this type of cult isn’t new – there is a longstanding Western tradition of millenarianism, which generates apocalypse cults. The AI risk cult has all the hallmarks of a millenarian apocalypse cult.

Marc is right here - the original AI safety thinkers are weirdos, the “AI ethics” people are mostly grifters, and hyping up AI risk may plausibly be a way to give your AI work a larger significance in the grand scheme of things.

So what? This is obvious ad hominem - the idiosyncrasies of the people who develop a set of ideas are irrelevant to the debate about those ideas themselves. Every group of people that has done something important in history has been weird. Normal people usually don’t do cool shit. Are you throwing away your math textbooks because many of history’s greatest mathematicians were mentally ill? Will you smash your iPhone because the people who started the IT revolution were eccentric, autistic, and culty?

It would be intellectually dishonest of me to write a section about how Marc is arguing against AI worriers because he wants to discourage regulations and industry standards that would harm his investments. I’m confident Marc genuinely believes what he says, but even if I’m wrong, who cares? The debate must always be about the ideas, not the people.

Oppenheimer was correct that he was involved in a project that changed the course of human history. Maybe Marc is right in that Oppenheimer was trying to aggrandize himself. Again, so what?

If we’re going to play a game of guilt by affiliation with Sam Bankman-Fried, might I point out that one of the world’s largest crypto investors probably has fewer degrees of separation between themselves and Sam than a Turing award winner? To be clear, I think it would be ridiculous to say that SBF is a stain on Marc, but it’s even more bizarre to name drop SBF to dismiss AI risk worriers.

China

> China has a vastly different vision for AI than we do – they view it as a mechanism for authoritarian population control, full stop. They are not even being secretive about this, they are very clear about it, and they are already pursuing their agenda. And they do not intend to limit their AI strategy to China – they intend to proliferate it all across the world, everywhere they are powering 5G networks, everywhere they are loaning Belt And Road money, everywhere they are providing friendly consumer apps like Tiktok that serve as front ends to their centralized command and control AI.
>
> The single greatest risk of AI is that China wins global AI dominance and we – the United States and the West – do not.
>
> I propose a simple strategy for what to do about this – in fact, the same strategy President Ronald Reagan used to win the first Cold War with the Soviet Union.

A world in which the CCP develops AGI first is a very bad world. But a lot of the harm from China developing AI first comes from the fact they probably will give no consideration to alignment issues. So if we develop AI with the same cavalier attitude in an effort to beat them, and humanity’s future is thwarted, what have we gained? Thankfully we have a lead on China right now. We should maintain that lead and use it to dramatically increase investment in technical alignment.

I don’t understand how we get from “Develop AI tech faster” to “China is prevented from abusing AI”. Do we use AI to fight a war against the CCP that takes them out of power and prevents an internal Chinese panopticon? If not, how exactly does the pace of AI progress in America change what the CCP will do in China?

At the end of the essay, Marc advocates for free proliferation of open source AI. If the real threat from AI is that China catches up to American companies, then surely this is a bad idea. When we were competing with the Soviet Union, we wouldn’t have allowed our advanced weapons technology to be leaked to the enemy. If AI is the most powerful tool in a conflict with China, then shouldn’t we be similarly worried about sharing AI secrets with China through open source?

Note that in terms of concrete ideas, there’s a lot of overlap between China hawks and AI safety worriers: They both want to prevent China from hacking into the AI labs and stealing weights. And I’m sure AI safety worriers were quite pleased by Biden’s restrictions on China’s access to advanced semiconductors and the equipment used to make them. Export controls can harm China’s ability to compete with us on AI without increasing the risk from more advanced models.

AI isn’t social media

People like Marc are still pretty hung up on the social media culture war. He had a front row seat to this battle for over a decade. Marc is on the board of Meta, and his firm a16z was an early investor in the original Twitter and is now an investor in Elon’s takeover. Especially since 2016, there has been a huge moral panic around misinformation, hate speech, and algorithmic bias. Social media companies did not stand their ground and explain why these concerns were exaggerated or misplaced (at least until recently). Instead, they repeatedly conceded to the demands of activists, governments, and the media.

It seems Marc wants to draw a line in the sand this time - we will not give in to your hysterical demands to control our technology. But a website where you post life updates, memes, and news articles is just not the same thing as a steadily improving general intelligence capable of doing science and tech. And you’re missing the story if you insist on thinking about the development of this current infant alien intelligence using your decade old culture war lens.

Imagine the first hunter gatherers to plant some seeds across a patch of land. In the grand scale of human history, the creation of AI is as significant as the agricultural revolution. Forcing your perspective on AI through the current “trust and safety” wars is like the proto-agriculturalists killing each other over whether they dedicate their first harvest to the tree spirit or the fire deity.

Thanks to Sholto Douglas, Agustin Lebron, Asara Near, Trevor Chow, and Tristan Hume for comments.

[1] How does it cure cancer, or even help cure cancer, without building a model of how cancer works, then developing subgoals like “Study pathway x”, then creating and executing plans like “Use experimental design y to study pathway x”? And once GPT-7 is able to create and execute plans, why are you so sure that these plans will all be about curing cancer and nothing else?

I wasn’t expecting this (that’s on me): bulletproof counterarguments and factual takedowns. Brilliant essay.

> We’re talking about something that is potentially more powerful than any human

What makes you claim that? AI doomers and safetyists are wrong to assume that the current LLM/RL paradigm will exponentially accelerate into something creative, autonomous, disobedient, and open-ended. The "AGI via Scaling Laws" claim is flawed for similar reasons (see my critique here: https://scalingknowledge.substack.com/i/124877999/scaling-laws).